With rapid advancements in technology, Artificial Intelligence (AI) has emerged as a transformative force across various sectors, including the legal field. In simple terms, AI refers to the simulation of human intelligence by machines, particularly computer systems and software. While AI has brought numerous benefits, it also presents significant challenges, particularly in areas concerning privacy, misinformation, intellectual property, and criminal behavior.

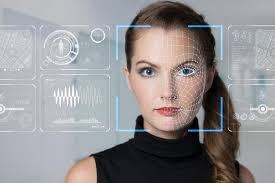

Among these challenges, deepfakes have become a growing concern. Deepfakes are AI-generated videos, images, or audio recordings that mimic real individuals, often making them appear to say or do things they never did. Despite the increasing misuse of deepfakes in fraud, entertainment, and political manipulation, India currently lacks a dedicated legal framework to address this emerging threat effectively.

Key Threats Posed by Deepfakes

Understanding the risks associated with deepfakes is essential for developing legal and technological safeguards. The primary threats include:

- Defamation and Harassment – The creation of false and misleading content can severely damage a person’s reputation, leading to emotional distress and harassment.

- Financial Fraud and Impersonation – AI-generated voice cloning and impersonation can be used for scams and unauthorized financial transactions.

- Cybercrimes and Identity Theft – Deepfakes can be used by malicious actors to impersonate individuals and steal sensitive data or personal identities.

Legal Framework Governing Deepfakes in India

India currently lacks a specific statute to address deepfakes directly. However, several existing legal provisions are used to address the harms caused by such content:

- Sections 499 & 500 IPC – Criminal defamation provisions apply when deepfakes damage an individual’s reputation (punishable with imprisonment of up to 2 years, a fine, or both).

- Sections 123 & 125, Representation of the People Act, 1951 – Spreading false political narratives in connection with an election can invite penal consequences.

- Section 66C, IT Act, 2000 – Identity theft via digital means, including deepfakes, is punishable with imprisonment of up to 3 years and a fine of up to ₹1 lakh.

- Section 66D, IT Act, 2000 – Cheating by personation using a computer resource is punishable with imprisonment of up to 3 years and a fine of up to ₹1 lakh.

- Section 463 IPC – Forgery involving deepfakes can lead to criminal charges.

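- Sections 67 & 67A, IT Act, 2000 – Publishing or transmitting obscene deepfake content is punishable on first conviction with imprisonment of up to 3 years and a fine of up to ₹5 lakh (Section 67); where the material is sexually explicit, the punishment rises to imprisonment of up to 5 years and a fine of up to ₹10 lakh (Section 67A).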

- Section 292 IPC – The sale, distribution, or circulation of obscene material, including obscene deepfakes, falls under this provision.

- Section 354C IPC – If deepfake content involves voyeurism or violates a woman’s privacy, it is punishable with up to 3 years of imprisonment.

Challenges in Legal Enforcement of Deepfakes in India

- Judicial Challenges – It is increasingly difficult for courts to distinguish between genuine and AI-generated content. Forensic AI expertise is often required to verify evidence.

- International Legal Gap – Many deepfake sources originate from foreign jurisdictions, complicating enforcement and legal cooperation.

- Lack of Awareness and Technical Training – Law enforcement and cyber units often lack the tools and expertise needed to identify and respond to deepfakes promptly, hampering the investigation process.

- Difficulty in Tracing Origin – The use of VPNs and anonymizing tools makes it hard to trace the actual creators of deepfake content, especially when generated abroad.

Conclusion

Deepfakes represent a serious and evolving challenge in the digital age, with the potential to undermine trust, privacy, and security on multiple fronts. While India has some legal provisions that can be applied to deepfake-related offenses, these are piecemeal and reactive in nature. A comprehensive and proactive legislative framework is urgently needed to specifically address deepfake technologies, ensure accountability, and equip enforcement agencies with the tools required to tackle such threats. As the boundary between real and fake continues to blur, the legal system must adapt swiftly to safeguard individual rights, national security, and the integrity of information in the public sphere.

Contributed by: Aastha Shrivastav (Intern)